Silicon has aged. Computer microprocessor (CPU) chips based upon silicon wafer technologies have increased in power according to Moore’s Law for almost sixty years now, since it was first posited in 1965. But as true as this law has (mostly) proven to be, we all knew that it had a shelf-life, a half-life even. There would inevitably come a time when we couldn’t cram any more transistors onto a chip and so we went through a period of combining CPUs in parallel and also reaching into the newer world of Graphics Processing Units (GPUs), which we still do today, especially in relation to cloud computing.

But other technologies have been developed that work in this space.

All Photonics Network (APN)

NTT is determined to change the way we use computing devices with a series of developments that stem from base infrastructure levels but manifest themselves in very tangible real world deployment scenarios. The company’s All Photonics Network (APN) is part of its Innovative Optical & Wireless Network (IOWN) concept alongside the company’s cognitive foundation and digital twin computing programs.

The change being brought about here is a question of data science, software engineering and electronics, all channelled through the practice of photonics. For those who skipped the photonics module at school, this branch of optics shares DNA with quantum electronics and essentially uses light as its medium. Through the generation, detection and manipulation of light photons, photonics is concerned with the emission, transmission, modulation, signal processing, switching, amplification and sensing of those light photons in order to control the particles and waves that light itself is composed of.

To put it another way, using light-based networking rather than silicon and electricity, photonics can give us 125 times more transmission capacity than traditional silicon and it is 100 times more power efficient – and that’s ‘today’ i.e. this quite embryonic technology is still in its ascendancy and may shape (pun intended, light travels in waveforms right?) the way computer systems are built in the next decade, or even the next half-century.

NTT showcase selection

Keen to demonstrate and (the company hopes) validate its technology propositions, NTT typically demos its latest developments across a series of so-called ‘showcase’ setups across its various R&D centers, one of which is in Sunnyvale with others located around the world including Tokyo. The company underlines what is apparently an annual $3.6 billion investment in technological and scientific research and development. Key to its current work is the above-mentioned Innovative Optical and Wireless Network (IOWN) initiative, a global communications infrastructure said to be capable of enabling high-speed, high-capacity Internet services utilizing optical technologies.

In the area of remote-control construction equipment monitoring, NTT has demonstrated one use case for the IOWN All-Photonics Network (APN), connecting on-site construction machinery with remote-control systems. The technology aims to address issues facing the construction industry including worker safety, labor shortages and long working hours. Through high capacity and low delay, NTT is able to improve safety and efficiency, with plans to expand this technology to other working environments.

By transmitting images and other information at a construction site with low delay levels, operators in remote areas can accurately grasp the site conditions, making it possible to [comprehend, visualize and interpret the state of] an environment close to working at the site. In the future, the use of remote control of construction equipment is expected to improve the efficiency of operations, such as reducing the travel time of operators and improving safety by reducing dangerous work performed by people on site.

NTT also recently released a new white paper highlighting the development of what it calls its “Inclusive Core” architecture for the 6G/IOWN era. This architecture incorporates concepts such as In-Network Computing and Self-Sovereign Identity (SSI) into the core network, reducing terminal processing load and protecting privacy for services used over the network. NTT says it has successfully demonstrated this architecture using the metaverse as a use case.

These are the kinds of hardware and software innovations that NTT is already known for; the organization has developed an intelligent ruggedized ‘typhoon-tracker’ raft-canoe-buoy device capable of being sent into the eye of a storm at sea to track waves and weather patterns. That’s about as purist NTT as it gets i.e. applicable to the Japanese market and the rest of the world, super smart data-centric technology and making use of technologies that you didn’t even know existed. But the company has also been following the groundswell of generative AI (gen-AI) and Large Language Model (LLM) development with its own approach to the still-nascent technology that has positively disrupted so many enterprise software systems this year.

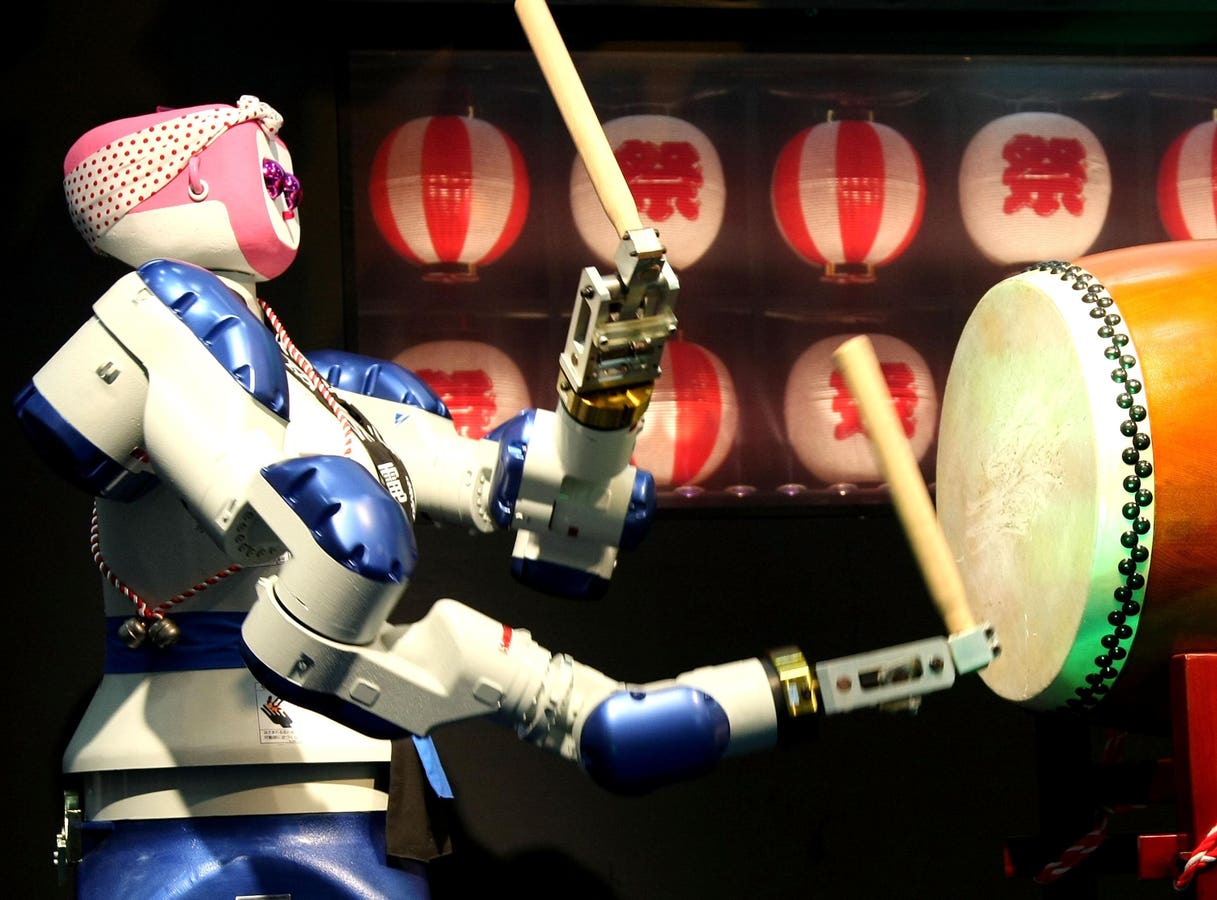

Enter, the ‘tsuzumi’ LLM, named after the traditional Japanese drum of the same name.

Bang the drum, ‘tsuzumi’ LLM

According to Akira Shimada, NTT representative director, president and CEO, the ‘tsuzumi’ LLM is characterized by its ability to work in Japanese or English, by its customizability and by its ability to read data in a wide variety of input modes. It is also called out for its high level of cost performance. It has English LLM capabilities that rival Meta’s own LLM and is claimed to operate faster than OpenAI’s work in this field. Plus, crucially, it takes up only 1/20th of the Graphics Processing Unit (GPU) capacity to make it work. It can read text and graphics and also understand voice tones. The company wants to direct it to solve social issues such as the world labor shortage referenced above.

Discussing the data loads that LLMs will need to be able to shoulder and ingest for training in the next decade and beyond, the NTT president and CEO has stressed the amount of work that the firm is doing in terms of making datacenter energy usage more efficient through its work in photonics. With another key factor being speed, photonics again comes into play as it can clearly reduce latency issues experienced with current application structures running on ‘traditional’ cloud services.

“Because APNs process light and require less energy, the IOWN mission is therefore to create a data-driven society that is carbon-neutral from first principles. In order to train tsuzumi, NTT built a collaborative cloud environment to train the LLM model,” said Shimada. Moving to discuss the medical field, Shimada reminded us that the NTT tsuzumi is ‘lightweight’ (a term used to denote a technology’s prudent and economic use of a smaller software code base) and agile so it can be placed in controlled remote environments where locking down data privacy is of paramount importance. This could be areas such as medicine with the sensitivity of needing to take care with Electronic Patient Records (EPR). With technologies stretching into the area of digital twins and the IoT close at hand, NTT says its focus is on a sustainable future with its R&D initiatives.

AI doesn’t need to know everything

“Because tsuzumi is lightweight it takes far less kilowatt per hour energy requirements to make it work,” said Shingo Kinoshita, senior vice president and head of R&D planning department at NTT Corporation, speaking in person to press and analysts in November 2023. “The intention with tsuzumi is not to make it an LLM that ‘knows everything’ but instead to make it more specifically aware of language surrounding key use case requirements.”

This is similar to the concepts we have heard discussed when vendors talk about the move to develop so-called Small Language Models (SLM) or Private AI Language Models. Because of this LLM’s flexible construction models, it can acquire industry and organization-specific intelligence and benefit from its ‘adapter’ technology, which enables the data engineers working with it to direct fine-tuning and apply it to specific industry use cases, including mission-critical application types such as those found in financial markets.

Having made sure the company worked with high-quality training data from the outset, Kinoshita is bullish about the prospects for this technology. He explains how it can understand the sentiment expressed in a manager’s voice (to see if anger or aggression is detected) when delivering orders and instructions to employees. Kinoshita suggests that analysis of this kind could help identify points of stress in the workplace and so help create workflows that benefit businesses and employees in equal measure.

Given that we know NTT historically as a telegraph & telephone company (the clue is in the name with the TT element), there has clearly been an evolution in the organization’s purview, remit and overall market outlook since it was first formed just after 1950. After those 70-something years, NTT is obviously keen to be perceived as a cloud-data-AI purist alongside the Silicon Valley natives and the rest of the world. All that said, there are still levels of discernible tradition and heritage across the business, which in this tumultuous period of world, life and commercial change may not be a bad thing. If NTT could keep its branding but change the wording behind its logo, it might opt for ‘new technology tomorrow’… but then again, maybe it wouldn’t, not absolutely everything needs reinvention after all.
