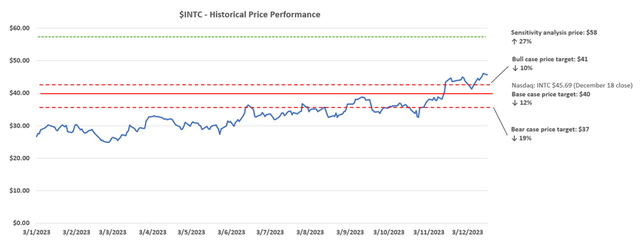

Intel’s stock (NASDAQ:INTC) has benefitted from a relentless rally since the beginning of November. And the stock has yet to lose momentum, despite the slight early December pullback in tandem with broader markets due to adverse labour market data.

Intel’s “AI Everywhere” product launch event last week has been a key reinforcement to its roadmap execution, preserving the stock’s recent gains. This has been further complemented by a macro-driven rally on the market’s rising optimism for impending rate cuts.

However, the most attention-grabbing product, “Gaudi 3”, Intel’s answer to burgeoning market demand for accelerator chips, received little airtime during the launch event. Little was disclosed about Gaudi 3’s specs and performance capabilities that investors did not already know. Management stuck with its repeated narrative on how Gaudi 3 would be competitive with Nvidia’s (NVDA) best-selling H100, and reiterated its commitment to start shipping in 2024. Meanwhile, the newest 5th Gen Xeon “Emerald Rapids” server processor is shipping into a modest data center CPU demand environment. In addition to the mixed macroeconomic backdrop, the industry is also contending with data centers’ gradual transition to the accelerated computing needed in the AI-first compute era. Accelerated systems risk eventually overtaking CPU-based systems, which is a headwind to the demand environment for Xeon processors.

Instead, we believe Intel is carving out an edge with an enhanced focus on AI PC opportunities through its latest “Core Ultra” client computing processors, more widely known as “Meteor Lake”. The proprietary client computing CPU, which is based on Intel’s latest Intel 4 process node and incorporates on-chip AI acceleration capabilities, also complements an improving PC market. Paired with a go-to-market roadmap that coincides with the holiday season, the Core Ultra PCs could potentially kick off an upgrade cycle – particularly in the commercial spending segment.

Taken together, we believe Intel’s execution this year has been favourable to rebuilding its competitive advantage, and turns the page on its previous reputation for delays and obsolescence. Looking ahead, investors will likely increase focus on the extent to which execution is underpinned by direct fundamental improvements. This would be critical to reinforcing the durability of the stock’s gradually narrowing valuation gap to its closest semiconductor rivals going forward.

AI in Data Centers

The AI Everywhere launch event was meant to draw focus to Intel’s re-established leadership across key computing verticals through its revamped product roadmap. The company kicked off 2023 with the launch of its 4th Gen Xeon “Sapphire Rapids” processor, and now finishes the year by unveiling its latest 5th Gen Xeon “Emerald Rapids” processor. The biggest differentiating factor for the latest Xeon chip is its built-in AI acceleration capabilities. This substantially narrows the technology gap that Intel had previously struggled to close against AMD’s (AMD) AI-optimized Genoa EPYC processors.

Competitive TCO and AI Capabilities

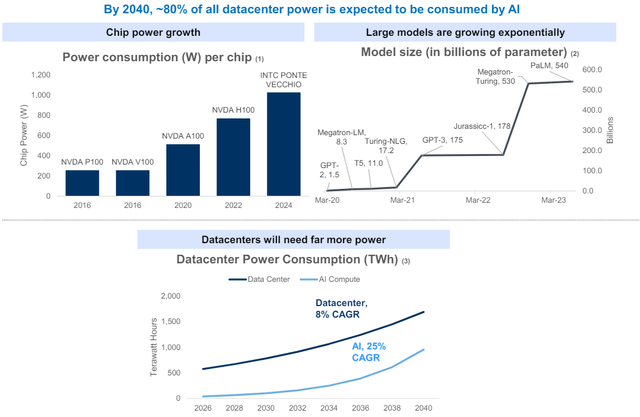

The advent of generative AI solutions this year has driven up compute costs for operators. Paired with ongoing macroeconomic uncertainties, performance and cost optimization have been at the forefront of budgeting and decision-making processes in the board room. Recent research estimates that complex AI workloads are approximately 5x more expensive to run than traditional cloud workloads. This is primarily driven by their higher compute power demands and, by extension, the energy required. AI-related power consumption in the data center is estimated to rise at a 25% CAGR over the next two decades. Despite anticipated efficiency gains realized over time from technology and hardware improvements, AI workloads are expected to drive 80% of data center power consumption by 2040.

RBC Capital Markets
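To put the cited growth rate in perspective, the short sketch below compounds a constant 25% annual rate over 20 years. It assumes the CAGR applies uniformly across the full two decades, which is a simplification on our part; the function name is purely illustrative.

```python
def compound_growth_multiple(cagr: float, years: int) -> float:
    """Return the cumulative growth multiple implied by a constant annual rate."""
    return (1.0 + cagr) ** years


# Assumption: the 25% CAGR holds uniformly for all 20 years.
multiple = compound_growth_multiple(0.25, 20)
print(f"Implied growth multiple over 20 years: {multiple:.1f}x")
```

Under that assumption, AI-related data center power consumption would end the period at roughly 87 times its starting level, which helps explain why energy efficiency features so prominently in TCO discussions.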

And this is where Emerald Rapids’ differentiation comes in. In addition to improving its foray in emerging AI opportunities, Intel also seeks to address customers’ growing optimization needs. We view Intel’s go-to-market strategy for Emerald Rapids as a two-parter. The first is to capture interest and demand through competitive performance and total cost of ownership (“TCO”) considerations. And the second is to preserve longer-term demand to ensure sustained growth at scale on related AI opportunities.

On the first part, Intel has optimized Emerald Rapids for processing AI workloads, which allows it to better partake in TAM-accretive opportunities stemming from the AI-first compute era. The newest server processor by Intel is expected to bring a 21% performance gain in general purpose computing, and 36% perf/watt improvement compared to Sapphire Rapids. And enterprise customers looking to partake in the typical five-year upgrade cycle could be facing TCO reductions by as much as 77%.

These are impressive cost and performance improvements compared to both its predecessor and competing server processors currently available in the market. Key customers, including IBM (IBM), have also provided validation to Emerald Rapids’ performance gains, citing 2.7x better query throughput achieved on its watsonx.data platform during testing. Price and performance gains observed by Google Cloud (GOOG / GOOGL) on cloud instances based on Sapphire Rapids have also encouraged the hyperscaler’s deployment of Emerald Rapids instances in 2024. This highlights the critical role of not just competitive performance, but also TCO considerations in capturing incremental market share within the growing AI industry.

On the second part pertaining to the preservation of market share, Emerald Rapids is focused on AI model inferencing. Inference refers to the process of running generative AI solutions/software already deployed, such as generating output on your day-to-day ChatGPT queries. Emerald Rapids is capable of 14x better inference performance than 3rd Gen Xeon Ice Lake and 42% better than Sapphire Rapids. This is an opportunity for Intel to partake in longer-term opportunities underpinned by the expanded deployment and adoption of generative AI solutions, while also facilitating current model training demands at scale.

However, Intel’s step-up in data center CPUs is shipping into an industry that is rapidly shifting to accelerated computing to facilitate high-performance computing and AI workloads. More than $1 trillion of CPU-based data centers built over the past four years face the impending upgrade cycle to accelerated computing. And this inevitable overhaul has been accelerated by the emergence of generative AI as the “primary workload of most of the world’s data centers” going forward. Although Emerald Rapids is inherently optimized for AI workloads, it only partially mitigates the risk of technological inferiority and market share loss.

Uncertainties in Accelerated Computing

This again shifts focus to Intel’s developments in accelerated computing – particularly on Gaudi 3. We believe Gaudi 3 remains a key area of interest for investors when it comes to Intel’s foray in data center and AI (“DCAI”). This follows the outburst of demand for Nvidia’s H100 accelerator chips this year, which has essentially single-handedly propelled the chipmaker’s valuation into the trillion-dollar club.

However, the Gaudi 3 received little airtime during the AI Everywhere keynote event, despite its mission-critical role in propelling Intel’s AI pursuit in the data center. Other than Intel’s reiterated commitment to the 2024 shipping timeline, and a brief showcase of the “first ever Gaudi 3 in public”, management provided little detail on the new accelerator’s pipeline or performance specs.

While Gaudi 3 aims at competing against Nvidia’s H100 with up to a 50% TCO advantage on training LLMs like GPT-3, Nvidia is already preparing for its next-generation chips in 2024. This includes the H200, which reduces LLM TCO and energy costs by at least 50%, while also doubling the inference speed relative to its predecessor. The leader in AI accelerators has also touted the next-generation Blackwell architecture B100 GPUs, which will drive further exponential breakthrough in inference performance and TCO.

The H200 also complements Nvidia’s most advanced GH200 Grace Hopper Superchip currently available for access through its DGX GH200 system. Meanwhile, AMD has also recently unveiled the competitive specs of its latest Instinct MI300 accelerators that will begin shipping next year. With AI hardware demand rapidly ballooning into a $400+ billion opportunity by the end of the decade, Gaudi 3 represents a key piece to Intel’s data center and AI aspirations. This accordingly makes the Gaudi 3’s upcoming go-to-market a key driver to the stock’s next leg of upside in our opinion.

Differentiation in AI Client Computing

Meanwhile, we view Intel’s growing focus on AI PC opportunities as a prudent strategy in differentiating its competitive advantage. Much of the stock’s 73% upsurge this year was realized over the past six months on increasingly tangible signs of a PC market recovery. This steepening upsurge is likely reflective of the client computing segment’s critical role within Intel’s business model for driving pent-up value in the stock. Intel remains the leader in CPU sales, particularly in the PC market. Intel’s client computing segment currently accounts for more than half of its consolidated sales.

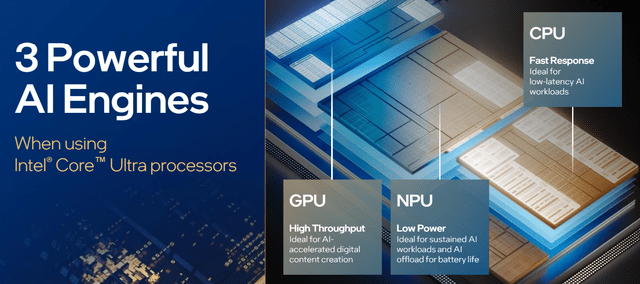

We believe it is prudent for Intel to home in on growing AI PC sentiment with its recent launch of the Intel Core Ultra CPU, previously known as “Meteor Lake”. Core Ultra is Intel’s first chip based on the Intel 4 process node. The key differentiation for Intel’s latest and greatest client computing processor is its built-in AI acceleration capabilities.

By combining a neural processing unit (“NPU”) with a CPU and GPU, the Core Ultra is able to efficiently allocate compute power in processing big and small tasks. Specifically, NPUs are optimized for processing AI workloads, which essentially frees up the CPU and GPU for other tasks.

Intel AI Everywhere presentation

The Core Ultra is also Intel’s first client computing solution with “3D chip stacking”, enabled by its proprietary “Foveros 3D” packaging capability. This allows the chiplets within the Core Ultra processor to be stacked vertically, rather than horizontally, enabling “greater performance in a smaller footprint”. The combination delivers greater power efficiency by reducing the chiplets’ reliance on one another to operate, and by allowing portions to be “shut off” when idle. This has been key to enhancing the battery life of Core Ultra PCs to a maximum of 12.5 hours per charge, helping bridge the gap between Windows laptops and MacBooks powered by Apple silicon (AAPL).

We believe Intel’s emphasis on AI PC opportunities will yield a favourable payoff given its first-mover advantage. The strategy could even compensate for some of Intel’s technological inferiority to peers in the DCAI product roadmap, and reinforce its share grab of emerging AI opportunities. Recent industry research expects 80% of the global PC market to be AI-optimized over the next five years, highlighting the emerging opportunity for Intel.

The transition will be primarily driven by the burgeoning deployment and end-user adoption of generative AI use cases on the PC – starting with the most ubiquitous chatbots and copilot assistants today. And Intel is already working on the build-out of its AI PC ecosystem to reinforce share gains on this front. The company has partnered with over 100 independent software vendors to create more than 300 AI-accelerated PC features uniquely optimized for Core Ultra PCs deploying through 2024.

Intel AI Everywhere presentation

According to Intel, the number of Core Ultra AI PC applications and frameworks deploying in 2024 alone is already triple the volume of what is currently offered by any competing chipmaker. Intel Core Ultra will be incorporated into more than 230 upcoming models from the world’s top PC makers, with some already available for purchase today. In addition to complementing the recovering PC market, Core Ultra PCs’ go-to-market timeline also coincides with the latest holiday shopping season.

We believe the Core Ultra reinforces Intel’s continued leadership in client computing. It provides Intel with a first-mover advantage against emerging competition from both chipmakers and key customers that have been prioritizing the development of in-house silicon.

But the jury is still out on what differentiates Core Ultra AI PCs from what is currently available in the market. Preliminary user reviews have been mixed. While some have indicated outperformance against the latest M3 MacBook Pro 14” in various CPU benchmark tests, others have cited nominal differences between Core Ultra and regular PCs in performing generative AI tasks. Improvements in graphics were also mostly summarized as “subtle”. This is likely due to the lack of AI PC use cases, other than chatbots and copilots that most regular Windows laptops can still easily handle. The situation highlights the urgency for Intel to build out the broader AI PC ecosystem needed to optimize Core Ultra’s potential.

Instead, we believe Core Ultra is well-positioned to benefit first from the impending upgrade cycle in commercial settings before gaining mass market adoption. The enterprise IT environment is likely to be the first to implement more complex AI tasks designed for the PC. These include emerging AI-enabled productivity software like Microsoft 365 Copilot (MSFT) and industry-specific tools like Salesforce’s (CRM) EinsteinGPT suite, as workplace settings adapt to the nascent technology.

Valuation Considerations

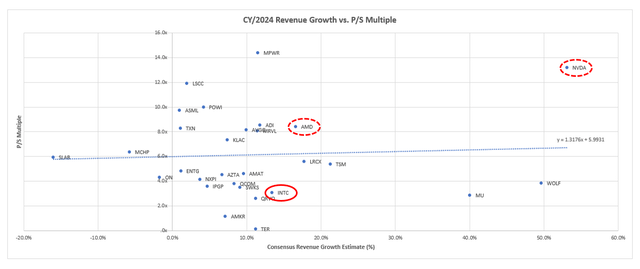

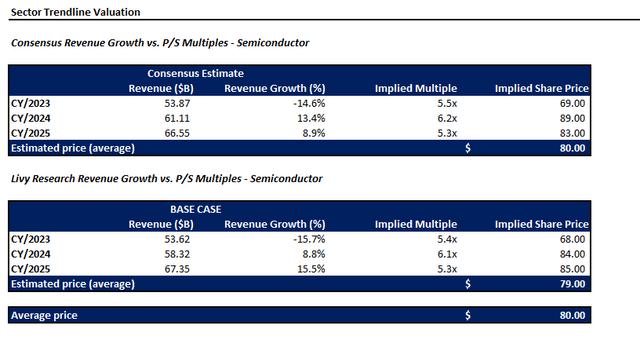

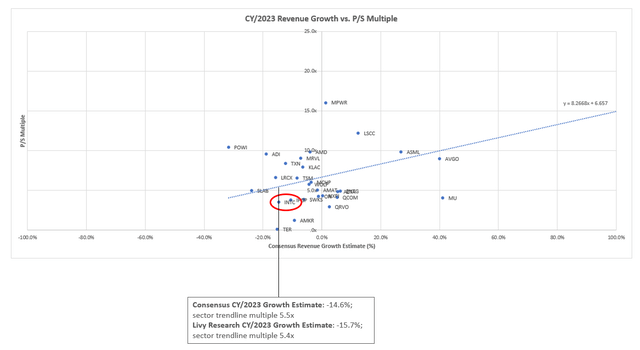

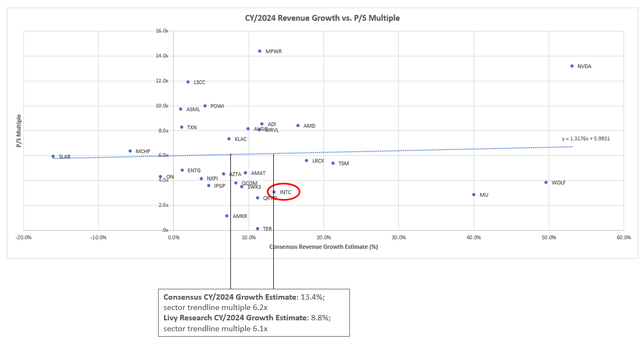

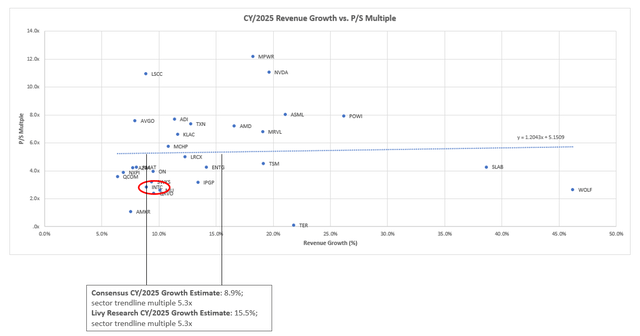

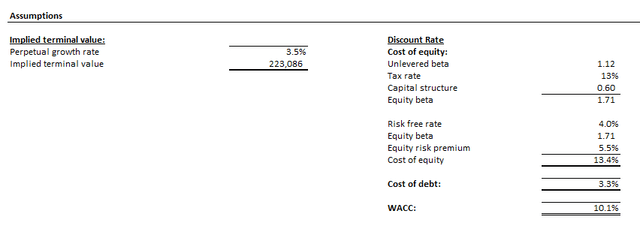

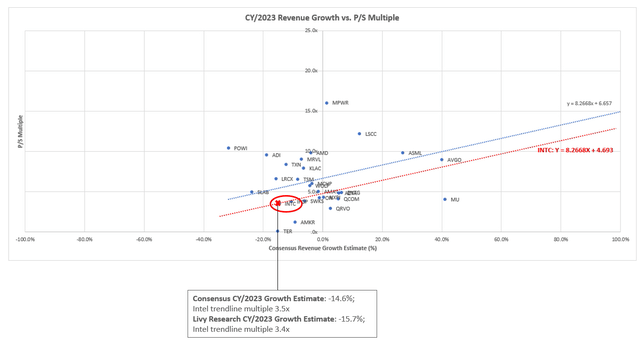

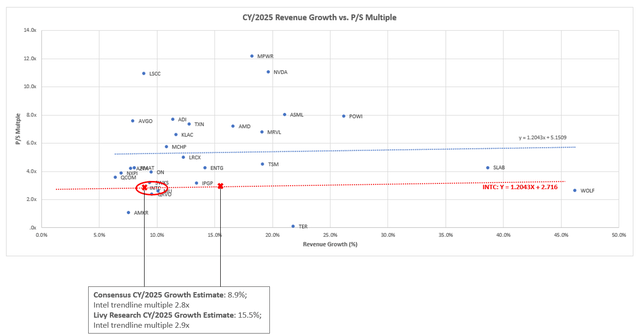

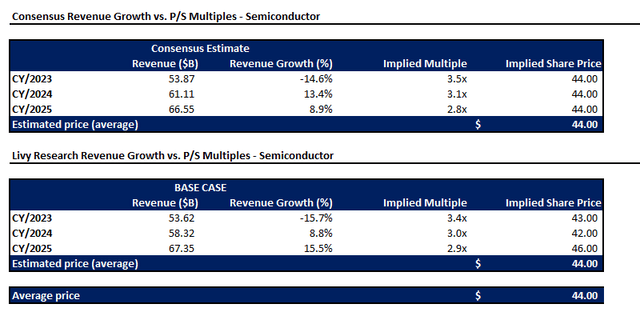

Considering the following table of comparable multiples observed across the Philadelphia Semiconductor Index (SOX) constituents, Intel’s leading competitors AMD and Nvidia are trading above the sector trendline.

Despite Intel’s competitive expertise in AI, server and PC processors, it is trading below the sector trendline instead. We believe this discount to peers reflects the price attributable to company-specific execution risks on Intel’s IDM 2.0 strategy. Specifically, the capital-intensive portion of Intel’s foundry ambitions remains one of the biggest limiting factors to the stock. The elevated investment outlay, paired with its expensive ramp-up, remains unfavourable to Intel’s capital structure amid a high capital cost environment.

Data from Seeking Alpha

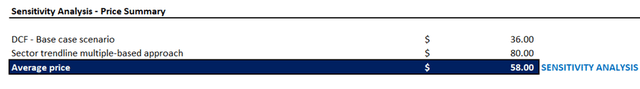

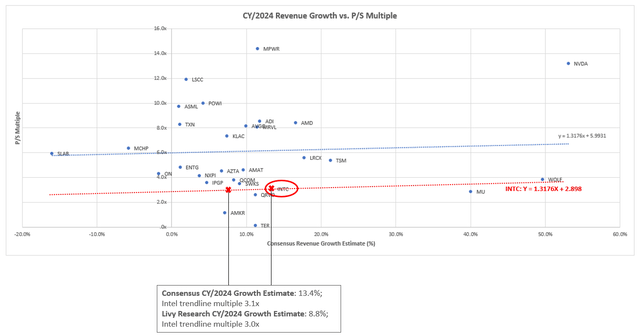

Intel’s AI Sensitivity Analysis

However, the emerging AI PC opportunity, paired with Intel’s recent developments in data center processors, can play a fundamental role in narrowing the valuation gap. With consistent delivery of progress on the roll-out of its product and foundry roadmap stipulated in the IDM 2.0 strategy, alongside tangible fundamental improvements, the stock could trade closer to peer levels at $80.

Author

The price is determined by lifting Intel’s valuation at current levels to the sector average relative to its forward growth prospect stipulated in our previously discussed base case projections.

Data from Seeking Alpha


We believe the stock should at least trade at $58 under the sensitized scenario where Intel’s revamped product roadmap fully materializes. This would represent upside potential of 27% from the stock’s current trading levels.

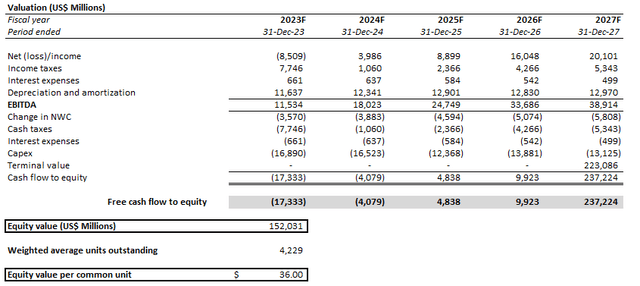

The $58 price generated from our sensitivity analysis equally weighs the $80 outcome from the sector trendline multiple-based approach and $36 outcome from the discounted cash flow approach (further discussed in later sections).

Author

We believe this combination adequately reflects the reduction to company-specific execution risks attributable to Intel’s capital-intensive IDM 2.0 strategy, while also considering the estimated intrinsic value of its longer-term cash flows. Any incremental upside potential from there would depend on Intel’s ability to monetize its completed product roadmap.
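The arithmetic behind the sensitized price can be reproduced with a minimal sketch. It equally weighs the $80 multiple-based outcome and the $36 discounted cash flow outcome, then backs out the share price implied by the stated 27% upside. The function names are illustrative, and the implied current price is our own back-calculation rather than a figure quoted in the analysis.

```python
def blended_price(estimates, weights=None):
    """Weighted average of price estimates; equal weights when none are given."""
    if weights is None:
        weights = [1.0 / len(estimates)] * len(estimates)
    if len(weights) != len(estimates):
        raise ValueError("estimates and weights must have the same length")
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(price * weight for price, weight in zip(estimates, weights))


def implied_current_price(target, upside):
    """Back out the current price from a target price and a fractional upside."""
    return target / (1.0 + upside)


# $80 from the sector trendline multiple approach, $36 from the DCF approach.
sensitized = blended_price([80.0, 36.0])
# Assumption: the 27% upside is measured from the price at the time of writing.
implied = implied_current_price(sensitized, 0.27)
print(f"Sensitized price: ${sensitized:.0f}")
print(f"Implied current price: ${implied:.2f}")
```

The equal weighting yields $58, and a 27% upside to that level corresponds to a share price in the mid-$40s.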

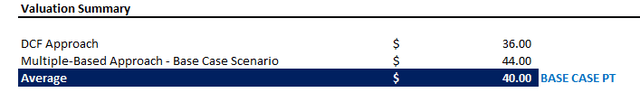

Base Case Validation Considerations

In the meantime, we remain incrementally cautious of Intel’s execution risks. Admittedly, Intel’s consistent positive progress this year lessens concerns that intensifying competition and the rapidly evolving technological landscape could disrupt its IDM 2.0 roadmap. However, significant hurdles remain on whether the capital-intensive turnaround could yield a meaningful return. And the combination of an uncertain PC market recovery, the emerging evolution in CPU-based data centers, and a capital-intensive foundry roadmap amid tightening financial conditions has only added complexity to the matter.

As Intel is still building out, and not yet ramping monetization of, its IDM 2.0 strategy, we are reverting to the conservative valuation approach for the stock. Our revised base case price target of $40 is derived by blending results from the discounted cash flow and multiple-based valuation methods. This compares to our previous base case price target of $34.

Author

We believe the blended valuation approach is a better gauge of Intel’s estimated intrinsic value based primarily on its fundamental performance, while also taking into consideration the impact of broader market sentiment typically priced into comparable peer multiples. The upward price revision is primarily driven by adjustments to the discount rate to reflect recent shifts in the macroeconomic backdrop.

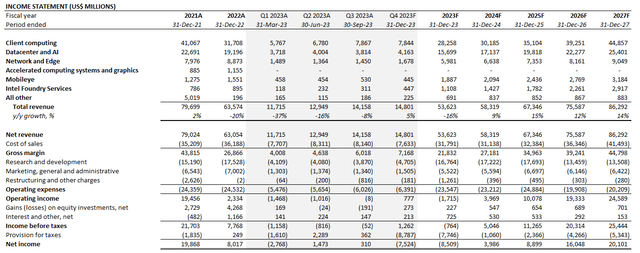

Author

As discussed in the foregoing analysis, we believe the products unveiled at Intel’s AI Everywhere keynote last week reinforce its near-term growth outlook. However, it is unlikely they will drive substantial outperformance over what has already been considered in our previous base case fundamental projections. Specifically, we view the recently launched Emerald Rapids, Gaudi 3, and Core Ultra as reinforcing, and not accretive to, anticipated growth in the near term. As a result, we have kept our fundamental projections for Intel unchanged in response to the latest product launch event.

Author

Intel_-_Forecasted_Financial_Information.pdf

Under the DCF approach, we have considered cash flow projections taken in conjunction with the base case fundamental forecast. The key valuation assumptions applied remain largely unchanged from our previous coverage, aside from reducing Intel’s discount rate from 10.4% to 10.1%. This is primarily to account for the recent drop in the risk-free rate assumption that is benchmarked to the 10-year Treasury yield. Our risk-free rate assumption has fallen from 4.5% previously to 4%, as Treasuries rose on the Fed’s latest outlook on monetary policy given easing inflationary pressures. We believe the DCF analysis is a clean reflection of Intel’s estimated intrinsic value based solely on its fundamental prospects.
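To illustrate why a lower discount rate lifts a DCF-derived value, the sketch below discounts a purely hypothetical cash flow stream at 10.4% and at 10.1%. The cash flows, the 3% terminal growth rate, and the Gordon growth terminal value are assumptions chosen for illustration; they are not the projections or the terminal value method used in our analysis.

```python
def present_value(cash_flows, rate, terminal_growth):
    """Discount explicit cash flows plus a Gordon growth terminal value."""
    if rate <= terminal_growth:
        raise ValueError("discount rate must exceed terminal growth")
    # Explicit forecast period: cash flow in year t is discounted t years.
    pv = sum(cf / (1.0 + rate) ** t for t, cf in enumerate(cash_flows, start=1))
    # Terminal value at the end of the final forecast year, then discounted.
    terminal = cash_flows[-1] * (1.0 + terminal_growth) / (rate - terminal_growth)
    return pv + terminal / (1.0 + rate) ** len(cash_flows)


# Hypothetical inputs for illustration only (not Intel forecasts).
hypothetical_cash_flows = [10.0, 11.0, 12.0, 13.0, 14.0]
hypothetical_terminal_growth = 0.03

pv_old = present_value(hypothetical_cash_flows, 0.104, hypothetical_terminal_growth)
pv_new = present_value(hypothetical_cash_flows, 0.101, hypothetical_terminal_growth)
uplift = pv_new / pv_old - 1.0
print(f"Value uplift from the lower discount rate: {uplift:.1%}")
```

Most of the uplift in a setup like this comes from the terminal value, since the discount rate appears both in the terminal value denominator and in the discount factor applied to it.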

Author


Under the multiple-based approach, we have considered Intel’s current valuation trendline in parallel to the sector trendline presented in the earlier section. The corresponding multiple on Intel’s valuation trendline based on its growth prospects is applied to our fundamental projections for the company.

Data from Seeking Alpha


We believe this multiple-based valuation approach is an appropriate reflection of market confidence in Intel on a relative basis to the broader chip sector, which has been a key beneficiary of the AI premium this year.

Author

The Bottom Line

The AI Everywhere keynote effectively reduces risks pertaining to Intel’s near-term technology roadmap as it continues to progress as expected. However, Intel has yet to experience a material shift that would differentiate its market position in ushering in the new era of AI-first compute. We believe management’s focus on AI PC opportunities is prudent and critical to reinforcing its market leadership in this foray. It also helps Intel defend its moat against the growing chorus of customers’ in-house silicon developments and intensifying competition from rival chipmakers like Nvidia, AMD, and, more recently, Qualcomm (QCOM).

As much as we believe the AI Everywhere keynote was a step in the right direction, we do not believe Intel is at an inflection point yet. With its AI PC ecosystem still in the early build-out phase, the extended monetization timeline does little to reduce near-term execution risks. Meanwhile, the company also remains in catch-up mode on DCAI opportunities. This accordingly provides limited near-term relief to the profit and cash flow margins critical to supporting the capital-intensive foundry ambitions set out in its IDM 2.0 roadmap. Although the AI Everywhere keynote reinforces confidence in Intel’s prospects, it brings about limited near-term upside potential to the stock. We do not think it will be too late to wait until Gaudi 3’s official launch to determine if Intel has really reached a positive inflection on its IDM 2.0 strategy. Until Gaudi 3 – the key focus for investors – starts shipping, the stock’s near-term performance will likely remain a function of macroeconomic conditions in tandem with the broader market.
