- Ad agencies are launching initiatives to label AI-generated influencers and other content.

- Many creator-economy insiders think regulation and labeling of AI is necessary.

- But others warn that disclosure might be difficult to enforce.

AI has already impacted many aspects of the creator economy, from helping with idea generation to automating editing tasks and creating virtual characters. Over 90% of creators use AI regularly, two recent surveys found, and brands are even encouraging them to make the most of these tools.

As the use of AI grows, some influencer-marketing agencies are launching initiatives to increase transparency around its presence in their campaigns.

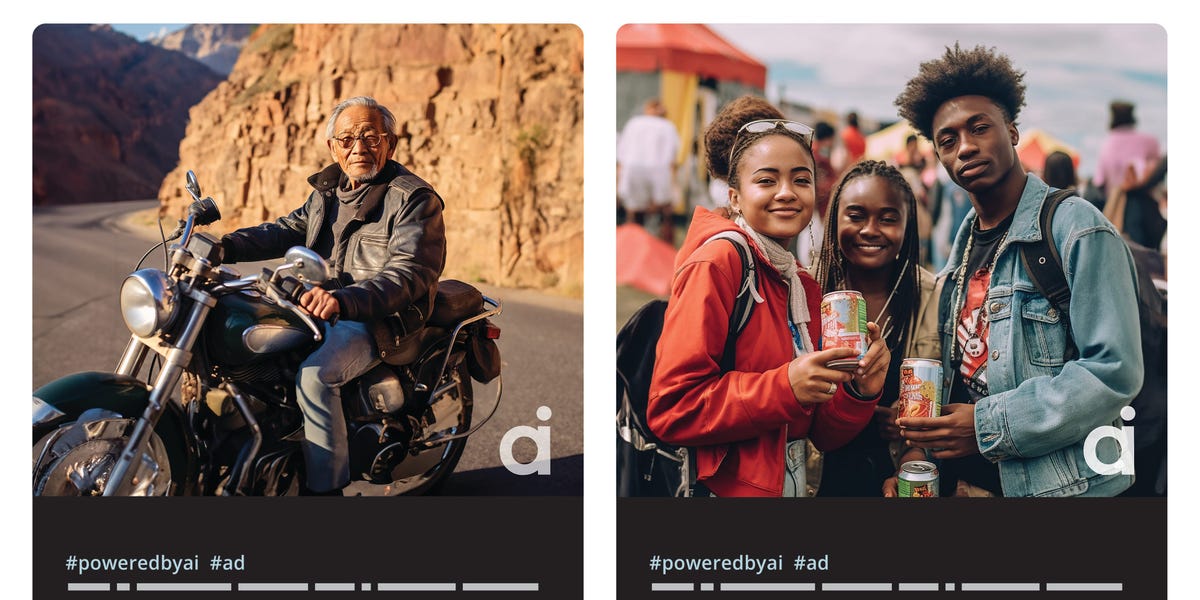

WPP-owned ad agency Ogilvy recently made a move in this direction. Ogilvy influencer-marketing campaigns that use virtual or AI-generated influencers will be accompanied by a hashtag #poweredbyAI and a watermark.

Ogilvy also launched an “AI Accountability Act” to push other advertising, PR, and social-media companies to mandate the disclosure of AI-generated creators. The ultimate goal is to get platforms to introduce a label similar to current “paid partnership” tags for sponsored content.

As AI-generated characters multiply and appear strikingly similar to real people — Ogilvy itself is behind an AI-generated version of Bollywood superstar Shah Rukh Khan, and Brazilian virtual creator Lu — there’s a rising need for disclosure, industry insiders said.

“It won’t be long before people are indistinguishable from avatars,” said Cameron Ajdari, founder of digital-talent-management firm Currents.

It’s not just about AI-generated characters, however. According to a survey from influencer-marketing agency Izea, 86% of internet users believe that AI-generated content broadly should be disclosed.

Izea also created a tool to enable programmatic labeling and disclosure of AI-generated content, including ChatGPT-generated text, although it won’t mandate its use.

And regulating AI disclosure might be easier said than done, industry insiders said, as AI might soon pervade nearly all aspects of content creation.

Many said AI use should be disclosed, especially when it comes to virtual influencers

Several creators Insider spoke with, including DIY creator Emma Downer, said that disclosing AI use is similar to disclosing a paid partnership, and that mandating disclosure is necessary to avoid eroding the basis of the relationship between a creator and their audience: trust.

“Marketing is built on a foundation of trust, that you aren’t being lied to or misled into doing something that you otherwise wouldn’t,” Downer said. “We want to make informed choices.”

When it comes to virtual influencers and characters, disclosure is even more important, particularly when it becomes “hard to distinguish between the human element and graphics,” said Cynthia Ruff, the founder of creator-economy startup Hashtag Pay Me.

“I absolutely think disclosure is necessary if marketers plan on using AI-generated images, likeness, or voices for marketing purposes,” she said. “We already see US politicians deep-faking their opponents in smear campaigns today and likely have a family member who sends it to us thinking it’s real.”

As AI advances, is regulation even possible?

Other creator industry insiders have mixed opinions about whether regulation makes sense, given how fast the AI space moves.

“We seem to be jumping decades in just weeks, going from 8-bit characters to fully rendered human beings,” said Lia Haberman, a marketer and influencer-marketing professor at UCLA Extension. “But as AI gets integrated into everything we do online, #PoweredByAI may need to be reimagined to cover the broader use of AI in campaigns and digital work in general. There’s a very fine line between AI-generated virtual influencers and AI-assisted real influencers, so where does the labeling begin and end?”

And mandating disclosure at a large scale is difficult, especially when it comes to building legislation around it.

“I’m not bullish on it happening anytime soon,” said Avi Gandhi, the founder of consulting firm Partner with Creators. “US Congress couldn’t even ask a cogent question to the CEO of TikTok. Regulating AI is well beyond their capacity, sadly.”

On the platform side, it’s unclear if or when any steps will be taken to label AI content.

TikTok declined to comment for this story, but a May report from The Information showed that the company appeared to be developing a system to disclose AI-generated video. Snapchat and Pinterest also declined to comment for this story, while YouTube and Meta didn’t respond to a request for comment.

Given the speed at which AI moves and its pervasiveness, creator Alasdair Mann said he thinks reverse labeling might actually make more sense.

Mann himself has been using artificial intelligence for months to generate images, content ideas, and templates.

For Mann, watermarks may end up not saying “made by AI,” but rather “AI-free,” or “human-made.”

“AI content is so easy to mass produce and improving so rapidly that we will struggle with labeling all the AI content out there, it will be easier to label what isn’t made by AI,” he said.
