- Adobe is selling AI-generated images that depict the Israel-Hamas war in varying degrees of realism.

- One of the images has been shared online by the public without a clear indication that it’s fake.

- Misinformation about the Israel-Hamas conflict has already been rampant online.

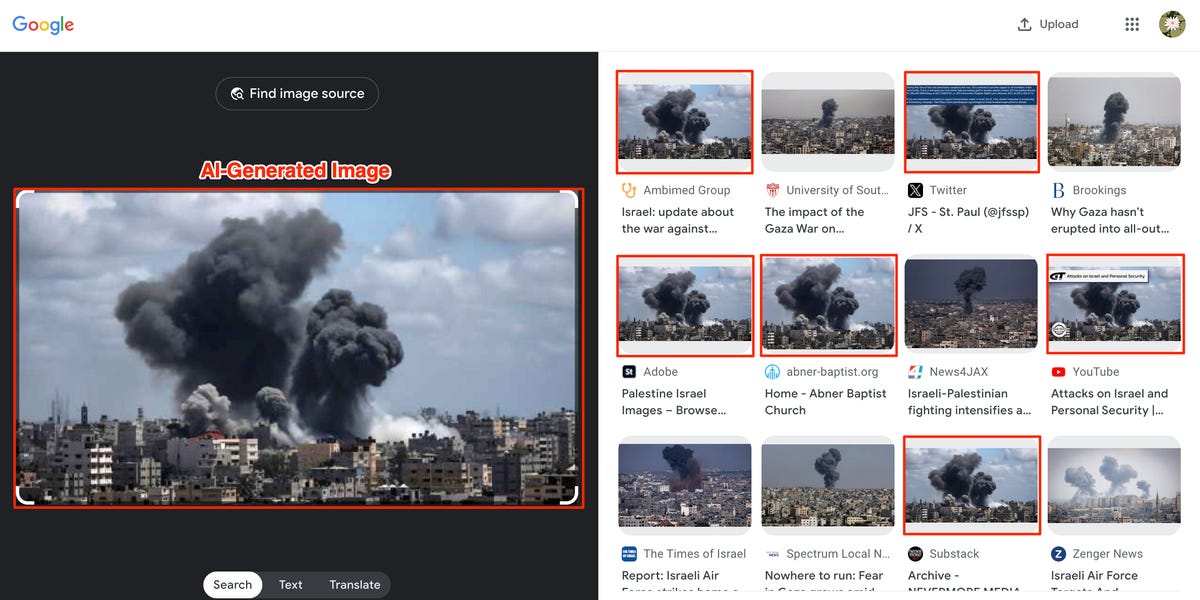

Adobe is selling AI-generated images depicting the Israel-Hamas war. While some are obviously computer-generated, others are more realistic, including one image that some smaller websites and social media posts have shared. That has raised concerns that the AI-generated content could contribute to misinformation, Australian outlet Crikey and Vice's Motherboard reported.

A search for "Israel Hamas war" in Adobe Stock reveals a slew of images showing war-torn streets, explosions, soldiers, tanks, burning buildings, and children standing in rubble.

Adobe Stock, which sells images submitted by individual artists, requires that all AI-generated images on the platform be labeled as such. But some of the images for sale are marked as AI-generated only in the fine print, not in their titles.

“Large explosion illuminating the skyline in Palestine,” the title of one AI-generated image reads. “Buildings destroyed by war in the Gaza Strip in Israel,” reads another. An image showing a woman in distress is titled “Wounded Israeli woman clings to military man, begging for help.”

Some of the image titles do mention AI. One AI-generated image, titled “conflict between israel and palestine generative ai,” looks similar to actual images from the war and was shared online.

A Google reverse-image search for the image shows instances where it has been used across the internet in posts, videos, and social media, alongside the original Adobe link. The search also turns up other, very similar images from the conflict that are presumably real. It's unclear whether those who used the AI-generated image on their websites or in their social media posts were aware that it isn't a real photo.

“These specific images were labeled as generative AI when they were both submitted and made available for license in line with these requirements,” an Adobe spokesperson said in a statement to Insider. “We believe it’s important for customers to know what Adobe Stock images were created using generative AI tools.”

“Adobe is committed to fighting misinformation, and via the Content Authenticity Initiative, we are working with publishers, camera manufacturers and other stakeholders to advance the adoption of Content Credentials, including in our own products,” the statement continued. “Content Credentials allows people to see vital context about how a piece of digital content was captured, created or edited including whether AI tools were used in the creation or editing of the digital content.”

Misinformation and disinformation about the Israel-Hamas war are already rampant online. Misleading content, old videos and photos from conflicts in other parts of the world, and even video game footage have been presented as real footage from the still-unfolding conflict.

And as AI-generated content becomes both more realistic and more widespread, it can be difficult to discern whether an image is real or not, especially when even AI image detectors can be easy to fool.

With AI-generated images, it's also much harder to police labeling once a user has downloaded the image. After all, there's no guarantee that someone will clearly note the image's origin or that it was made with AI.

Henry Ajder, an AI expert who is on the European advisory council for Meta's Reality Labs, previously shared a few tips with Insider to help distinguish AI-generated images from real ones. AI images can often look "plasticky" or overly stylized, and might have aesthetic inconsistencies in their lighting, shapes, or other details.

Ajder also suggests asking questions when an image seems a little too sensationalized, like “Who’s shared it?” “Where has it been shared?” and “Can you cross-reference it?” He also suggests doing a reverse image search to find an image’s context.
